There are few tech topics today that attract more public attention than AI, Artificial Intelligence. Not even Blockchain. Not even Bitcoin. Certainly not Big Data, which has already gone past its hype cycle peak.

However, most of what is currently being said and written on AI is based on a massive misunderstanding which could eventually lead to its downfall. Again. It would not be the first time in history, though. There have been a good number of so-called “AI Winters”. The early ones date back to the 50’s, when the first successful experiments were made with Machine Translation (back then based solely on Computational Linguistics, which would later show its severe limitations). These early wins led researchers to boast that “within ten years time, the problem of machine translation will be solved”.

AI ice ages have been rife

Now look at where we are today. We are not in the 60’s anymore but have stepped well into the 21st century. And still, Machine Translation sucks. BIG time. Even Google’s translations are oftentimes more hilarious than accurate. And algorithmic innovation in Machine Translation has largely stalled, while current improvements mainly come from massive amounts of data being available and used for processing: so-called parallel corpora have been the key to better performance, where the same text exists in two languages – so machines can “learn” from it. Large step changes in algorithmic innovation, on the other hand, date back as far as the 90’s: Back then IBM introduced their 5 alignment models, kicking off Statistical Machine Translation (SMT), which would kick Computational Linguistics’ ass. But not as much as to finally solve Machine Translation.
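To make the idea of "learning" from a parallel corpus a bit more tangible, here is a minimal sketch in the spirit of the first of IBM's alignment models (Model 1). It is a simplified illustration, not IBM's original implementation: the three-sentence toy corpus, the function names, and the omission of the NULL word are all assumptions made for brevity. The point is merely that word translation probabilities can be estimated from sentence pairs alone, with no dictionary and no linguistic rules.

```python
from collections import defaultdict


def train_ibm_model1(corpus, iterations=20):
    """Estimate t(target_word | source_word) with EM, Model-1 style.

    Simplification (assumption): no NULL word, no alignment prior.
    corpus is a list of (source_tokens, target_tokens) sentence pairs.
    """
    src_vocab = {e for src, _ in corpus for e in src}
    tgt_vocab = {f for _, tgt in corpus for f in tgt}
    # Start from a uniform distribution: the model knows nothing yet.
    t = {(f, e): 1.0 / len(tgt_vocab) for f in tgt_vocab for e in src_vocab}

    for _ in range(iterations):
        count = defaultdict(float)  # expected count of (f, e) co-translations
        total = defaultdict(float)  # expected count of e translating anything
        # E-step: distribute each target word over the source words of its sentence.
        for src, tgt in corpus:
            for f in tgt:
                norm = sum(t[(f, e)] for e in src)
                for e in src:
                    frac = t[(f, e)] / norm
                    count[(f, e)] += frac
                    total[e] += frac
        # M-step: re-normalise the expected counts into probabilities.
        for (f, e) in t:
            t[(f, e)] = count[(f, e)] / total[e] if total[e] > 0 else 0.0
    return t


def best_translation(t, source_word):
    """Return the target word with the highest probability for source_word."""
    candidates = {f: p for (f, e), p in t.items() if e == source_word}
    return max(candidates, key=candidates.get)


# Hypothetical toy corpus: the same three phrases in English and German.
toy_corpus = [
    (["the", "house"], ["das", "Haus"]),
    (["the", "book"], ["das", "Buch"]),
    (["a", "book"], ["ein", "Buch"]),
]

t = train_ibm_model1(toy_corpus)
for word in ["the", "house", "a", "book"]:
    print(word, "->", best_translation(t, word))
```

Nothing in this code "understands" German or English. It only counts which words tend to show up together, which is also why more parallel text helps so much more than cleverer algorithms.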

The last notable AI Winter came over its miserable research community in the 90’s, when so-called expert systems (primarily rule-based systems that were intended to replace lawyers, physicians, etc., by giving expert advice to match that of human counterparts) failed to live up to expectations, sending AI into a self-inflicted slumber. Somewhat later, in the early 2000’s, I myself fell in love with AI, first with “Symbolic AI” (getting my head around all sorts of logic systems, in particular Description Logics), then with its probabilistic counterpart, diving head first into Machine Learning, Data Mining, and Recommender Systems. There, I was lucky enough to make research contributions that are regarded as seminal and have been cited more than 5,000 times in other papers.

But that’s not the focus of this story. When I was living through my AI love affair, no one else was. It was AI midwinter. Once more. Until AI woke up again somewhere around 2010.

AI’s resurrection through Deep Learning

Deep Learning appeared to be the necromancer whose magic wand would do the resurrection trick. It is a technology that had been invented in the late 90’s but did not attract major attention at first, owing to a) lack of computing power and b) lack of massive labeled data, which is needed to feed these algorithms and make them “learn”. Deep Learning is based on neural networks, which had kept on fascinating people because their very name already suggests a resemblance to our brain’s mode of operation (though simple forms of neural networks, the so-called “Back-propagating Neural Networks” (BNNs), have been shown to perform only slightly better than the Multiple Linear Regression Models known from Statistics). The nice thing about Deep Learning and its so-called CNNs, Convolutional Neural Networks, is that the designer of the neural network does not need to bother with feature engineering, which had always been a burden and a roadblock to neural network performance.

Since then, AI has boomed. Remarkable steps along the way have been the following ones (just a very, very short excerpt, by no means complete):

- IBM Watson playing in the TV show “Jeopardy!” and winning against human counterparts, in February 2011

- Google DeepMind’s AlphaGo beating two of the world’s best Go players in 2016

You can read it everywhere: Elon Musk, the master of telling great stories to an audience that is craving to believe his every word, will teach you that AI is even bigger than settling down on Mars (and at the same time dangerous to mankind itself), and that we should think about matters like a code of ethics for these artificial intelligence entities that will now roam the planet. Jack Ma’s words at 2018’s World Economic Forum can be interpreted in a similar fashion: “AI and robots are going to kill a lot of jobs, because in the future, these will be done by machines”. Lately I’ve read some (excuse my French …) bullshit on how to deal with the emotions that these machines will have.

At the same time, less visionary people like VC dudes and consultants (shamefully, including BCG, the consultancy I used to work for as a management consultant) demand that companies turn their gaze to AI and exploit its blessings for their business.

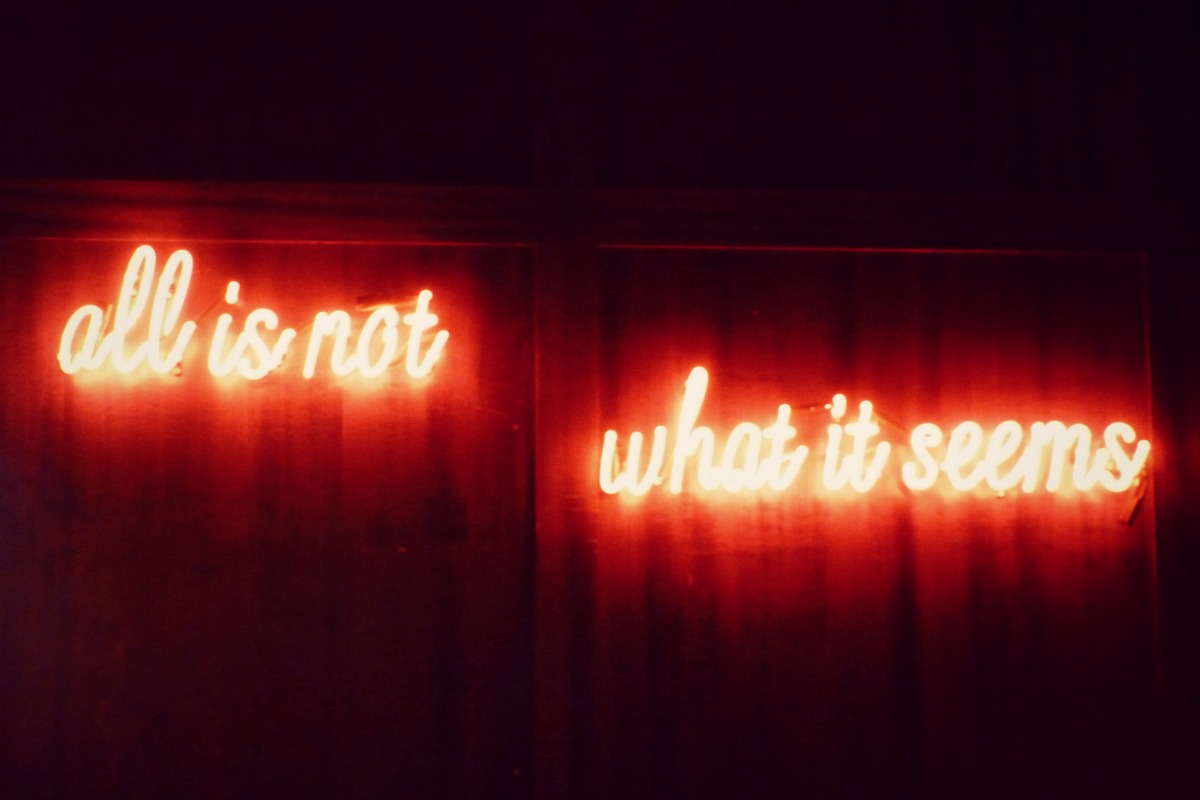

AI is not what it seems

Wait a minute. Does that mean that companies will employ HAL-like artificial intelligences, or robots, that can think and feel? Or that we’ll have lunch with a cyborg colleague in the not-so-distant future? This is exactly where the misunderstanding lies:

Artificial Intelligence is a big word. And not all the stuff that goes by the label of AI truly has to do with what we humans understand by “intelligent behavior”. The part of AI that currently gets (rightly!) a lot of limelight is the field of Machine Learning. Machine Translation, for example, is a very specialized field of Machine Learning. Chess playing is based on forms of Machine Learning, be it Supervised Learning (e.g., the above-cited Deep Learning approaches based on Convolutional Neural Networks) or Reinforcement Learning. Recognizing faces in a photo is a Machine Learning (ML) problem. Predicting whether you as a private person are worthy of a hefty mortgage loan is an ML problem, too.

Learning actually is a form of intelligent human behavior. However, what do machines actually learn in these Machine Learning settings? Is a chess playing computer already an intelligent entity? The point is, it’s not: Though IBM’s Deep Blue beat Kasparov, then considered the world’s best chess player, in their 1997 rematch, Deep Blue is still a very dumb thing:

- It cannot do anything but play chess, not even play any other board game

- There is no way it can sense and interact with its immediate real-world surroundings

- It does not exhibit even the least bit of autonomous behavior

- … Not to mention cognition and consciousness

It sounds trivial and banal, but when we speak of “artificially intelligent beings” that are supposed to compete with humans on equal terms, then the traits mentioned above are indispensable. However, autonomous behavior, cognition, consciousness, sensing, moving objects, and exhibiting emotions are commonly not covered by “mainstream AI”: They go under the term of “Artificial General Intelligence” or “Strong AI”, which are much more arcane and much less frequently heard of. In fact, advances along the road to this form of “True AI” are ridiculously small. If we ever get there at all. The Chinese Room argument is a simple thought experiment that argues that a computer program can never give a machine a mind, consciousness, or true understanding. The Wikipedia article on it is really worth reading.

Not intelligent, but still smart

So Machine Learning is good and very helpful. But it has nothing to do with what we humans consider intelligent behavior (and what research labels as “Artificial General Intelligence”). Nevertheless, it is really strange to see that ML has attracted major popular awareness only now. Learning problems are everywhere and always have been:

- See manufacturing (e.g., Otis): Collecting data about machines’ running operations, e.g., elevators, and learning in which situations (aka constellations of operational parameters) these machines go out of service

- See curated shopping (e.g., Outfittery or Modomoto, both taken from the German market): Learning styles and preferences of users and making individual recommendations to new users, without needing human style consultants

- See all sorts of autonomous driving, involving pattern recognition (i.e., recognizing road signs, other cars, etc., which can also be reduced to learning problems), learning how to react in certain situations, etc.

The list is nearly endless. Every company has countless problems that can be solved with Machine Learning approaches. XING Events, the company that I have the pleasure to serve as CEO, is no exception: In order to find out whether an incoming lead is highly valuable (or not so much), we also put technology to use that could pass as buzzy “AI technology”. Even though it’s just simple logistic regression and C4.5 decision tree models – both typical representatives of Machine Learning. And these learning models will never get anywhere close to autonomous behavior. In fact, they are quite stupid and non-intelligent.
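Just to show how unspectacular such "AI technology" looks under the hood, here is a minimal sketch of lead scoring with logistic regression, written in plain Python. To be clear, this is not our production code: the two features (expected attendees in hundreds, and whether the event is paid), the training data, and all function names are made up for illustration.

```python
import math


def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and bias with plain stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y  # gradient of the log loss w.r.t. the linear score
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def score_lead(x, weights, bias):
    """Return the estimated probability that a lead is valuable."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Hypothetical leads: [expected attendees in hundreds, is paid event (0/1)]
leads = [
    [0.2, 0.0], [0.5, 0.0], [0.3, 1.0], [0.4, 0.0],
    [3.0, 1.0], [5.0, 1.0], [4.0, 0.0], [6.0, 1.0],
]
# Hypothetical labels: 1 = turned out valuable, 0 = did not
valuable = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic_regression(leads, valuable)
print("big paid event:  ", round(score_lead([5.5, 1.0], weights, bias), 3))
print("tiny free meetup:", round(score_lead([0.1, 0.0], weights, bias), 3))
```

The whole "intelligence" here is a handful of numbers (the weights and the bias) being nudged until the predictions fit the past. Useful, yes. Autonomous or conscious, certainly not.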

To conclude, Machine Learning is extremely valuable and helpful in making our lives better. It is based on methods and techniques that are well-understood and mastered, and more algorithmic complexity does not necessarily mean better performance. However, all this has nothing to do with AI the way we humans understand the term.

If this is what we expect from it … then expect the next AI Winter to be here sooner than the next real winter.